Hathidy

Ben Schmidt

2020-03-12

Slides:

benschmidt.org/slides/hathidy

Package:

https://github.com/HumanitiesDataAnalysis/hathidy

Extracted Features

General vision: word count data should be able to meet scholars where they are.

- Languages: Sometimes R, sometimes Python, sometimes JavaScript.

- Data formats: word count tables, term-document matrices, etc.
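A word count table and a term-document matrix hold the same information in different shapes. As a minimal Python sketch (not hathidy's or the Feature Reader's API), assuming long rows of (htid, token, count) with made-up volume IDs, pivoting one into the other looks like this:

```python
from collections import defaultdict

def to_term_document_matrix(rows):
    """Pivot long (htid, token, count) rows into a dense term-document matrix.

    Returns (terms, htids, matrix) where matrix[i][j] is the count of
    terms[i] in volume htids[j].
    """
    counts = defaultdict(int)
    for htid, token, count in rows:
        # Sum repeated rows, e.g. the same token appearing on several pages.
        counts[(token, htid)] += count
    terms = sorted({token for token, _ in counts})
    htids = sorted({htid for _, htid in counts})
    # Cells with no observed count become 0.
    matrix = [[counts.get((t, h), 0) for h in htids] for t in terms]
    return terms, htids, matrix
```

In R, tidytext's `cast_dtm()` performs this kind of pivot; plain dictionaries keep the sketch dependency-free.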

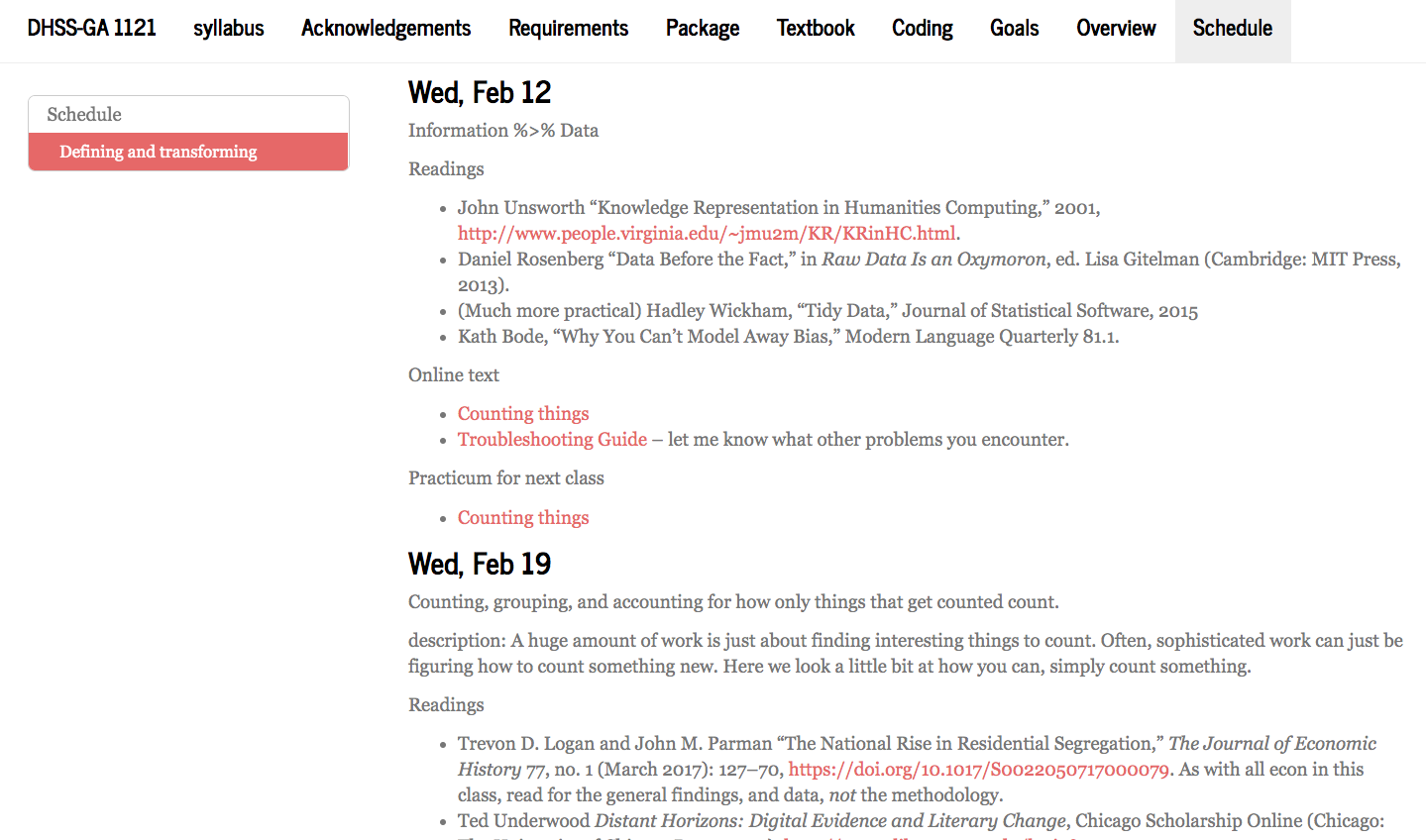

Teaching with HTRC features

- “Working with Data,” graduate digital humanities class.

- Syllabus-being-dismantled: benschmidt.org/WWD20

- Textbook-in-progress: benschmidt.org/HDA

Principle: counting, joining, and modeling are transferable skills that can be used on any data set.
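Counting and joining, reduced to their simplest form: the sketch below uses only the Python standard library, and the (htid, token, count) row shape and IDs are assumptions for illustration. It counts tokens per volume and joins each count to its volume's total to get relative frequencies. In the tidyverse the same steps would be `count()` and `left_join()`.

```python
from collections import Counter

def relative_frequencies(rows):
    """Count tokens per volume, then join each count to its volume's total.

    rows: iterable of (htid, token, count) tuples.
    Returns {(htid, token): share of that volume's tokens}.
    """
    rows = list(rows)  # allow two passes even if given a generator
    # Counting: total tokens in each volume.
    totals = Counter()
    for htid, _token, count in rows:
        totals[htid] += count
    # Counting: combine repeated (htid, token) rows, e.g. from different pages.
    per_token = Counter()
    for htid, token, count in rows:
        per_token[(htid, token)] += count
    # Joining: look up each volume's total by its htid key.
    return {key: n / totals[key[0]] for key, n in per_token.items()}
```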

So students need their own data sets. Thus: 🐘

HTRC Feature Reader (python)

- Pandas integration

- Enables complicated transformations (casing, chunking, etc.) inside the package.

- Significant performance optimizations

- Built for iterating across thousands of texts
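To make "casing" and "chunking" concrete: the sketch below is not the Feature Reader's API (which performs these transformations internally), just a hypothetical standard-library illustration assuming each page is a {token: count} mapping in page order. It folds case and sums consecutive pages into fixed-size chunks.

```python
from collections import Counter

def fold_case(page_counts):
    """Merge tokens that differ only by case, e.g. 'The' and 'the'."""
    folded = Counter()
    for token, count in page_counts.items():
        folded[token.lower()] += count
    return folded

def chunk_pages(pages, pages_per_chunk):
    """Sum consecutive pages into chunks of `pages_per_chunk` pages.

    pages: list of {token: count} mappings in page order.
    The last chunk is shorter if the page count does not divide evenly.
    """
    if pages_per_chunk < 1:
        raise ValueError("pages_per_chunk must be at least 1")
    chunks = []
    for start in range(0, len(pages), pages_per_chunk):
        chunk = Counter()
        for page in pages[start:start + pages_per_chunk]:
            chunk.update(page)  # Counter.update adds counts rather than replacing them
        chunks.append(chunk)
    return chunks
```

For example, `chunk_pages([fold_case(p) for p in pages], 2)` folds case first and then groups pages in pairs.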

Hathidy (R)

- Relies on the established universe of R packages (tidyverse, tidytext, etc.) for all actual analysis.

- Built for teaching, but safe for research.

- Focused on fast access to token data.

- Strong push towards particular storage formats.

Core principles for working with extracted features

- You should only ever refer to Hathi books in code by HTID (HathiTrust volume ID), never by file path;

- You should keep a local copy of every feature you’ve looked at;

- Page-level token data should be cached as CSV or Parquet, because those load much faster than the original compressed JSON files.
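The three principles fit in one function. This is a hypothetical sketch, not hathidy's implementation: `cached_tokens` and the `fetch` callable (which would download and parse an Extracted Features file) are assumptions. Code passes only an HTID; the cache file path is derived from it, and the network is touched only on a cache miss.

```python
import csv
from pathlib import Path

def cached_tokens(htid, cache_dir, fetch):
    """Return page-level (page, token, count) rows for `htid` from a local CSV cache.

    `fetch` is a hypothetical callable returning (page, token, count) rows
    for one volume; it is only called when no cached file exists yet.
    """
    # The volume is identified only by HTID; the file name is derived from it,
    # borrowing pairtree's substitutions so ':' and '/' in IDs are filesystem-safe.
    safe_name = htid.replace(":", "+").replace("/", "=")
    path = Path(cache_dir) / f"{safe_name}.csv"
    if not path.exists():
        rows = fetch(htid)
        path.parent.mkdir(parents=True, exist_ok=True)
        with path.open("w", newline="", encoding="utf-8") as f:
            writer = csv.writer(f)
            writer.writerow(["page", "token", "count"])
            writer.writerows(rows)
    # Always read from the local copy, so every volume looked at stays on disk.
    with path.open(newline="", encoding="utf-8") as f:
        return [(int(r["page"]), r["token"], int(r["count"])) for r in csv.DictReader(f)]
```

Swapping the CSV reader and writer for a Parquet library would follow the same pattern.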

Fast access means fast to code and fast to load.

Uniform model: you always access volumes by HTID, so the user is only dimly aware that files are involved.

But there are files, and they are cached on disk.

Currently hathidy uses a pairtree directory layout and CSV files; that is likely to change to a flat layout and Parquet.
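The difference between the two layouts can be sketched as path functions. This follows the pairtree convention of substituting characters in the ID and splitting it into two-character directories, but it is simplified (it skips pairtree's ^xx hex-encoding of special characters), and the exact directory and file names are assumptions rather than hathidy's or HTRC's actual layout.

```python
from pathlib import PurePosixPath

def clean_id(volume_id):
    """Pairtree-style character substitution (simplified: no ^xx hex-encoding)."""
    return volume_id.replace("/", "=").replace(":", "+").replace(".", ",")

def pairtree_path(htid, suffix=".csv"):
    """Nested layout: split the cleaned ID into two-character directories."""
    library, _, volume_id = htid.partition(".")
    cleaned = clean_id(volume_id)
    pairs = [cleaned[i:i + 2] for i in range(0, len(cleaned), 2)]
    return PurePosixPath(library, "pairtree_root", *pairs, cleaned, f"{library}.{cleaned}{suffix}")

def flat_path(htid, suffix=".parquet"):
    """Flat layout: every volume's file sits in a single directory."""
    library, _, volume_id = htid.partition(".")
    return PurePosixPath(f"{library}.{clean_id(volume_id)}{suffix}")
```

A nested pairtree keeps any single directory small; a flat layout is simpler to list and glob.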